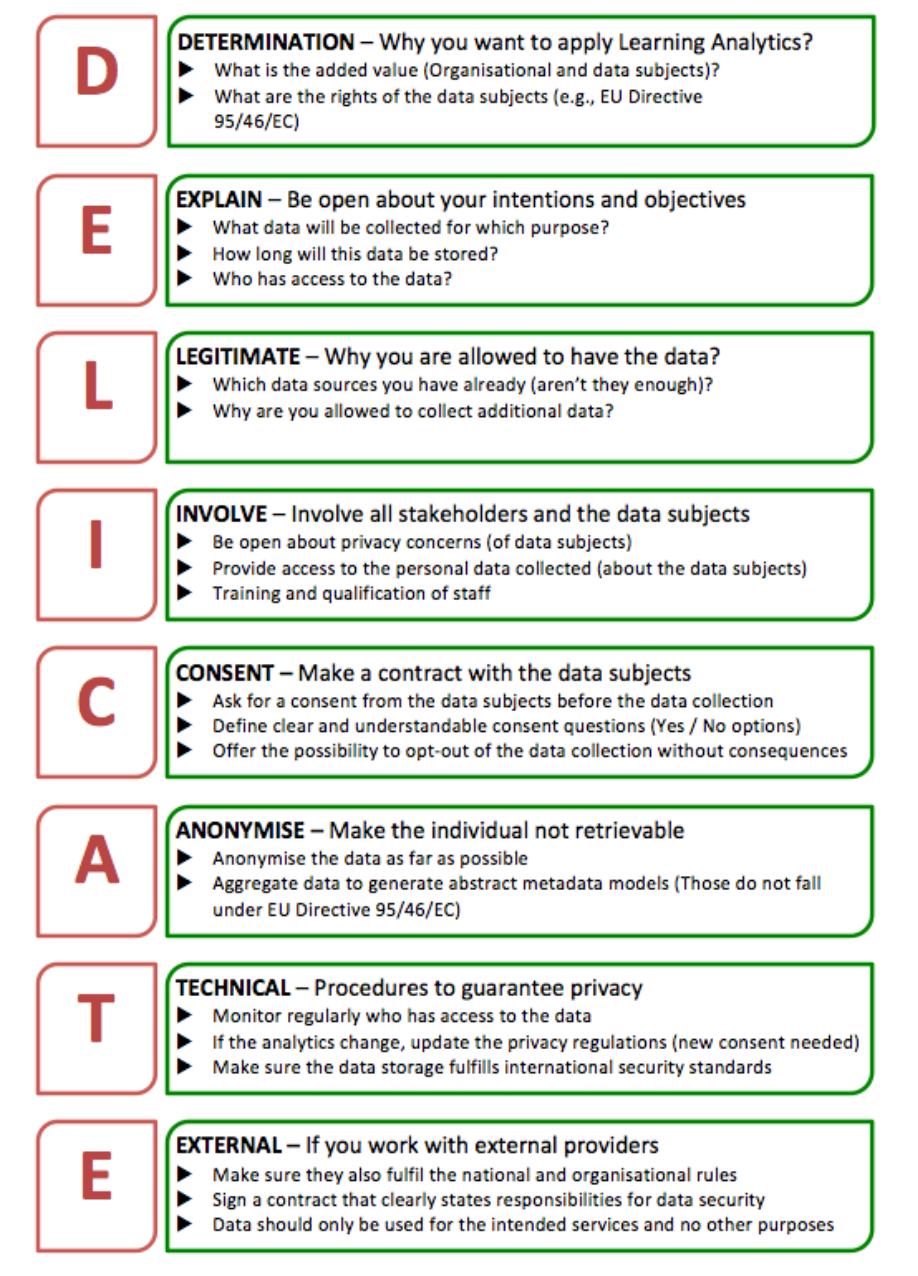

DELICATE Checklist

A Learning Analytics Policy Analysis

Drachsler and Greller’s “Privacy and Analytics - it’s a DELICATE Issue: A Checklist for Trusted Learning Analytics” (2016) provides a thorough and insightful analysis of institutional hesitancy towards learning analytics, from its roots in ethical practice and privacy rights to the erosion of those rights at the hands of contemporary techno-capitalism. This analysis informs and contextualizes the aptly named DELICATE framework, which serves as a learning analytics policy guideline for institutions wary of LA’s potential pitfalls.

Through a discussion of the history of ethics and privacy in research, the authors demonstrate that these concepts are a necessary foundation for successful learning analytics. Database breaches, secret Facebook research, large-scale data collection, and eroding individual privacy are a few of the many cited contributors to a pronounced distrust and evolving “fear” of learning analytics.

This analysis culminates in the presentation of the DELICATE checklist, which aims to empower institutions to implement learning analytics in a way that is collaborative, transparent, responsible, and, above all, aimed at building trust between learners and the institution. It recognizes that the modern online landscape has cultivated a heightened sensitivity to data collection for any purpose, and thus advocates for what I perceive to be a very conservative approach.

While the framework includes common policies such as transparency, responsibility, and consent, it places special emphasis on privacy, anonymity, and security.

It states that data should be made as anonymous as possible and be subject to stringent retention policies. In a world where cyber-attacks on educational institutions are common, these points of the framework are intended to mitigate risk. Most notably, the authors argue that a focus on privacy and anonymity in institutional analytics positions both concepts as a “service offering”. The reasoning appears to be that learners are so accustomed to interfacing with entities that have little respect for their privacy that encountering one that places such a major emphasis on it creates an environment highly conducive to trust.

DELICATE guides institutions to be transparent about their data collection intentions, methods, and goals, and to foster a collaborative analytics ecosystem. The aim is to subvert the all-too-common perception of analytics as a 1984-esque surveillance apparatus and replace it with one in which all stakeholders benefit.

These policies are intended to allow adhering institutions to establish themselves as what the authors describe as “Trusted Knowledge Organizations” - essentially entities that subvert the “fast and loose” approach to data that has led to an understandably common sentiment of distrust, if not suspicion, towards analytics.

I chose this policy checklist because its authors articulate a conflict on the subject of learning analytics that I find highly relatable. At different points in my life, I have been both the eager analyst and the suspicious, distrustful student, and I have always struggled to reconcile these two perspectives. I can appreciate the “fear” described by Drachsler and Greller, but also recognize that it is something worth overcoming for institutions. I work at UBC, whose learning analytics (LA) principles are also carefully thought out (Learning Data Committee, 2019). UBC’s principles and the DELICATE checklist overlap significantly. I will compare the decidedly conservative approach of DELICATE with the UBC LA purposes and principles document. The DELICATE checklist is intended to guide the creation of something like the UBC LA principles document, so where do they differ?

Though checklists and lists of principles are likely a poor measure of actual practice or implementation, they at least articulate an institution’s general intended approach. The UBC LA principles aren’t necessarily missing anything, but opt for a slightly different approach. Where DELICATE advocates a clearly conservative path for implementation, steeped in the byproducts of EU privacy legislation, the UBC LA principles appear to be written in a way that is broader and more permissive. Make no mistake, “Respect for persons” and “Stewardship and privacy” certainly encompass issues such as anonymity, privacy, bias, and data security, but the language used is much broader than the strict presentation of the DELICATE checklist. The principles are also less granular: where DELICATE is explicit about external provider policies, the UBC LA principles instead make broader statements regarding stewardship.

As discussed, I selected the DELICATE framework for its attempt to address the tension between the fear of data collection and its potential benefits in learning analytics. But in practice, I have to question whether it is too restrictive an approach. If anything, it is DELICATE that seems to be missing something when compared with the UBC LA principles. DELICATE contains little on the subject of bias and equity, whereas the UBC LA principles highlight these as key pillars. I don’t believe it is possible to eliminate bias or elevate equity through sheer anonymity and privacy, and so DELICATE begins to feel myopic.

Other criticism of DELICATE exists. Kitto and Knight (2019), for example, note that it advocates such a degree of anonymization that it likely fails to align with some requirements of the European Union General Data Protection Regulation (GDPR). How can “the right to be forgotten” be implemented without some degree of identification?

This exercise has made me appreciate the principles established for LA in my work context. UBC articulates a responsible, collaborative, and ethical approach to LA, while leaving sufficient breathing room for its benefits to be fully realized at all levels. The DELICATE framework has a clear purpose - to cultivate trust in institutional LA. It is therefore difficult to argue with its restrictive approach, but I would imagine it could be a significant impediment to performing the granular analyses that can benefit learners at an individual level.

References

Drachsler, H., & Greller, W. (2016). Privacy and analytics - it’s a DELICATE issue. A checklist for trusted learning analytics. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge (LAK ’16) (pp. 89–98). ACM.

Kitto, K., & Knight, S. (2019). Practical ethics for building learning analytics. British Journal of Educational Technology, 50(6), 2855–2870. https://doi.org/10.1111/bjet.12868

Learning Data Committee. (2019, July 4). Learning analytics at UBC: Purpose and principles. University of British Columbia. (Original work published 2018)

AI Statement

I used Anthropic’s Claude for a few grammatical and APA styling questions. Consensus AI was useful for searching LA policy research, and highlighted the Kitto and Knight (2019) piece. All prompts and chat output can be shared upon request.